Abdulaziz Alaboudi

Software engineering Ph.D. student

George Mason University

Office: 4404 Engineering Building

Email: aalaboud@gmu.edu

Education

- Ph.D. in information technology (concentration in software engineering) from George Mason University, VA, United States of America

2017-present. Current GPA: 4/4.

- Master of Science in software engineering from George Mason University, VA, United States of America

2014-2016. Graduated with distinction.

- Bachelor of Science in software engineering from King Saud University, Riyadh, Saudi Arabia

2007-2011. Graduated with honors.

Work Experience

- Graduate research assistant at the Developer Experience Design Lab (DevX)

Spring 2018-present

- Graduate teaching assistant at George Mason University

Fall 2017.

Courses:

- Object-Oriented Software Specification and Construction (graduate-level course)

- Design and Implementation of Software for the Web (undergraduate-level course)

- Teaching assistant at King Saud University

Spring 2011, Fall 2016 and Spring 2017.

Courses:

- Human-Computer Interaction

- Web Development for Software Engineers

- Software Quality Assurance

- National Information Center (NIC)

Summer 2011: internship as a software quality engineer.

View my full CV.

Selected Publications

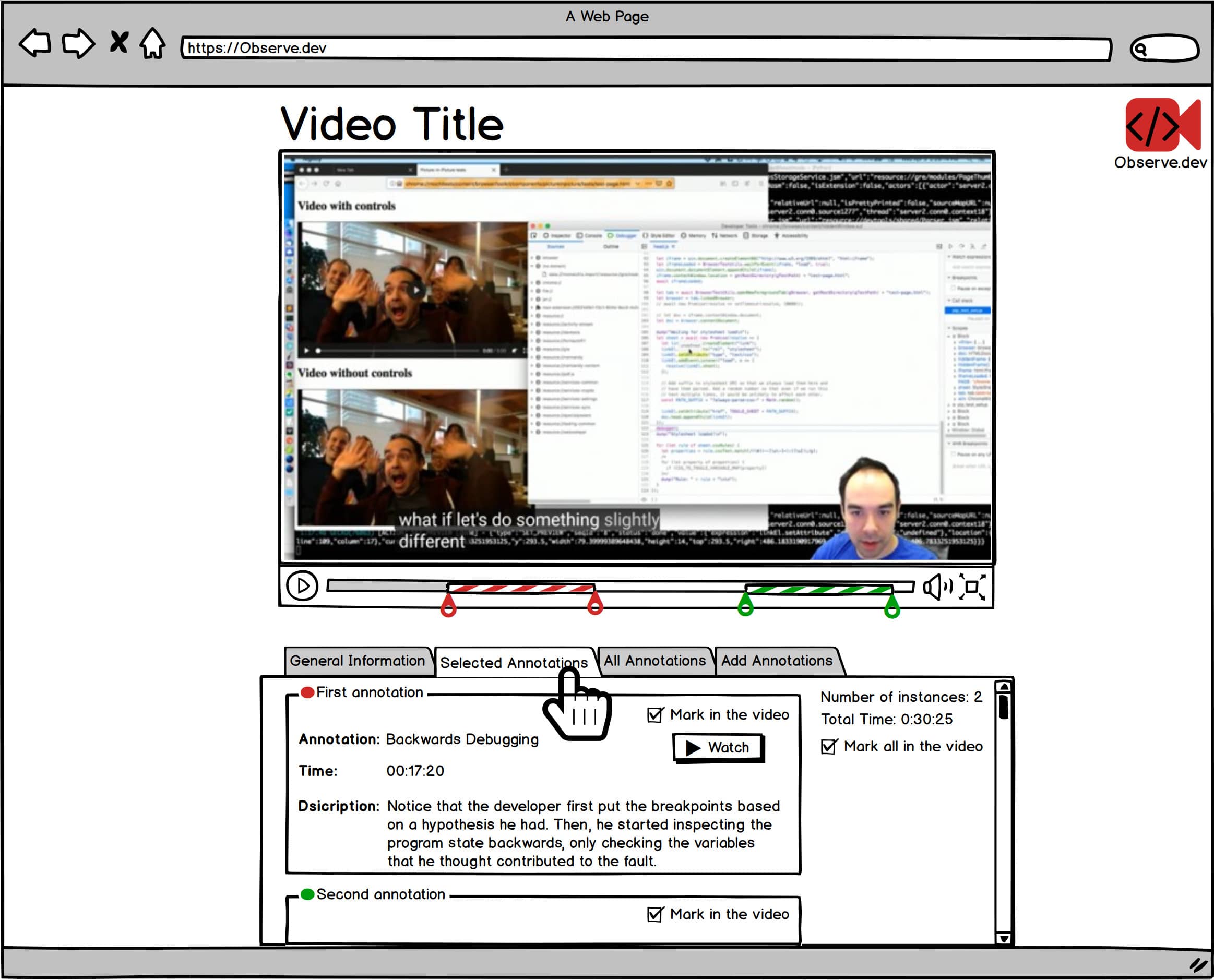

A. Alaboudi and T. D. LaToza, “Supporting software engineering research and education by annotating public videos of developers programming,” in Proceedings of the ICSE Workshop on Cooperative and Human Aspects of Software Engineering, 2019

Software engineering has long studied how software developers work, building a body of work that forms the foundation of many software engineering best practices, tools, and theories. Recently, some developers have begun recording videos of themselves as they work on programming tasks for open source projects, enabling them to share knowledge and socialize with other developers. We believe that these videos offer an important opportunity for both software engineering research and education. In this paper, we discuss the potential uses of these videos as well as open questions about how best to enable this envisioned use. We propose creating a central repository of programming videos in which videos can be analyzed and annotated to illustrate specific behaviors of interest, such as asking and answering questions and employing strategies, as well as software engineering theories. Such a repository would offer an important new way for both software engineering researchers and students to understand how software developers work.

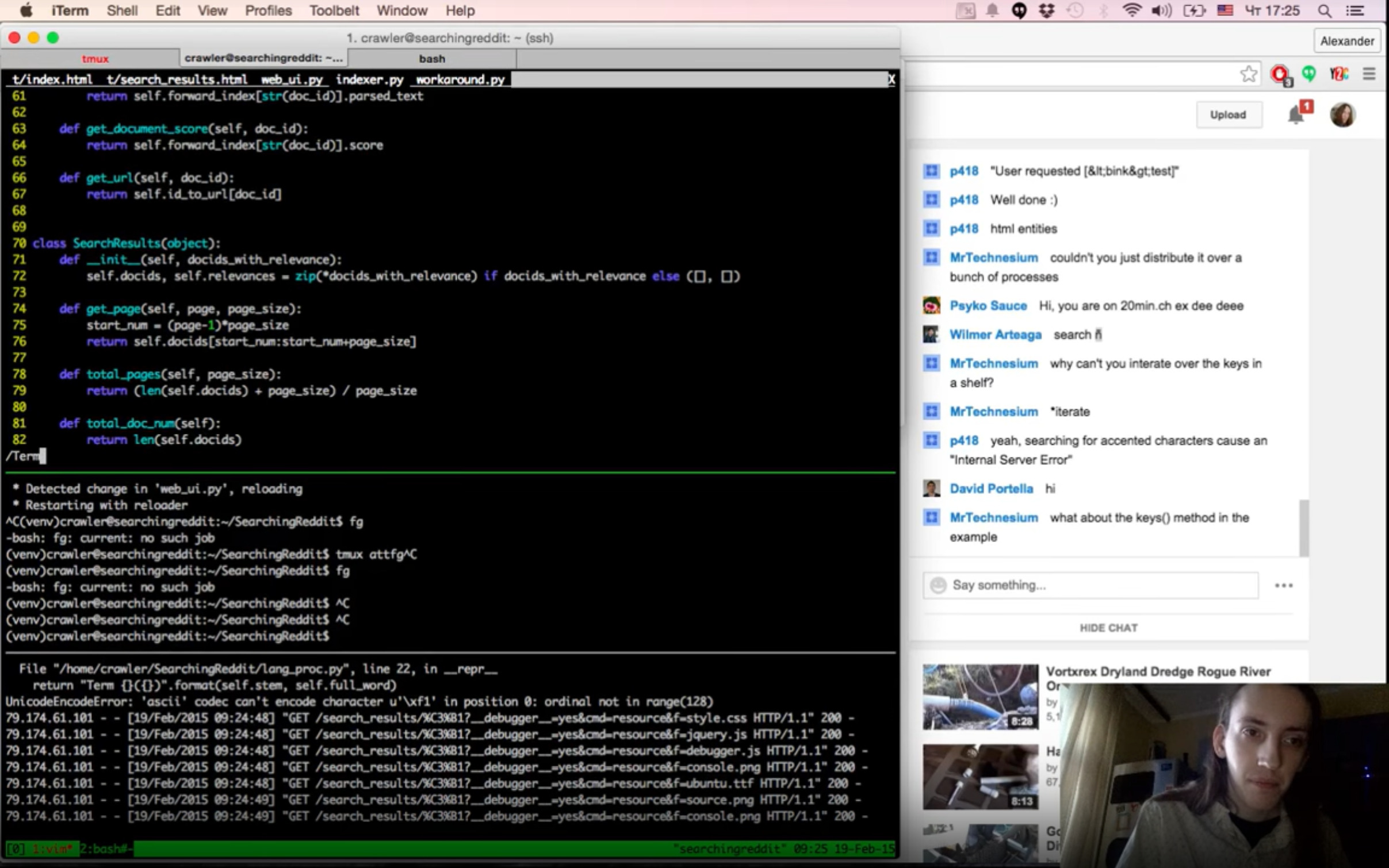

A. Alaboudi and T. D. LaToza, “An Exploratory Study of Live-Streamed Programming,” in 2019 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC)

In live-streamed programming, developers broadcast their development work on open source projects using streaming platforms such as YouTube or Twitch. Sessions are first announced by a developer acting as the streamer, who invites other developers to join and interact as watchers using chat. To better understand the characteristics, motivations, and challenges of live-streamed programming, we analyzed 20 hours of live-streamed programming videos and surveyed 7 streamers about their experiences. The results reveal that live-streamed programming shares some of the characteristics and benefits of pair programming, but differs in the nature of the relationship between the streamer and watchers. We also found that streamers are motivated by knowledge sharing, socializing, and building an online identity, but face challenges with tool limitations and with maintaining engagement with watchers. We discuss the implications of these findings, identify limitations of current tools, and propose design recommendations for new forms of tools to better support live-streamed programming.

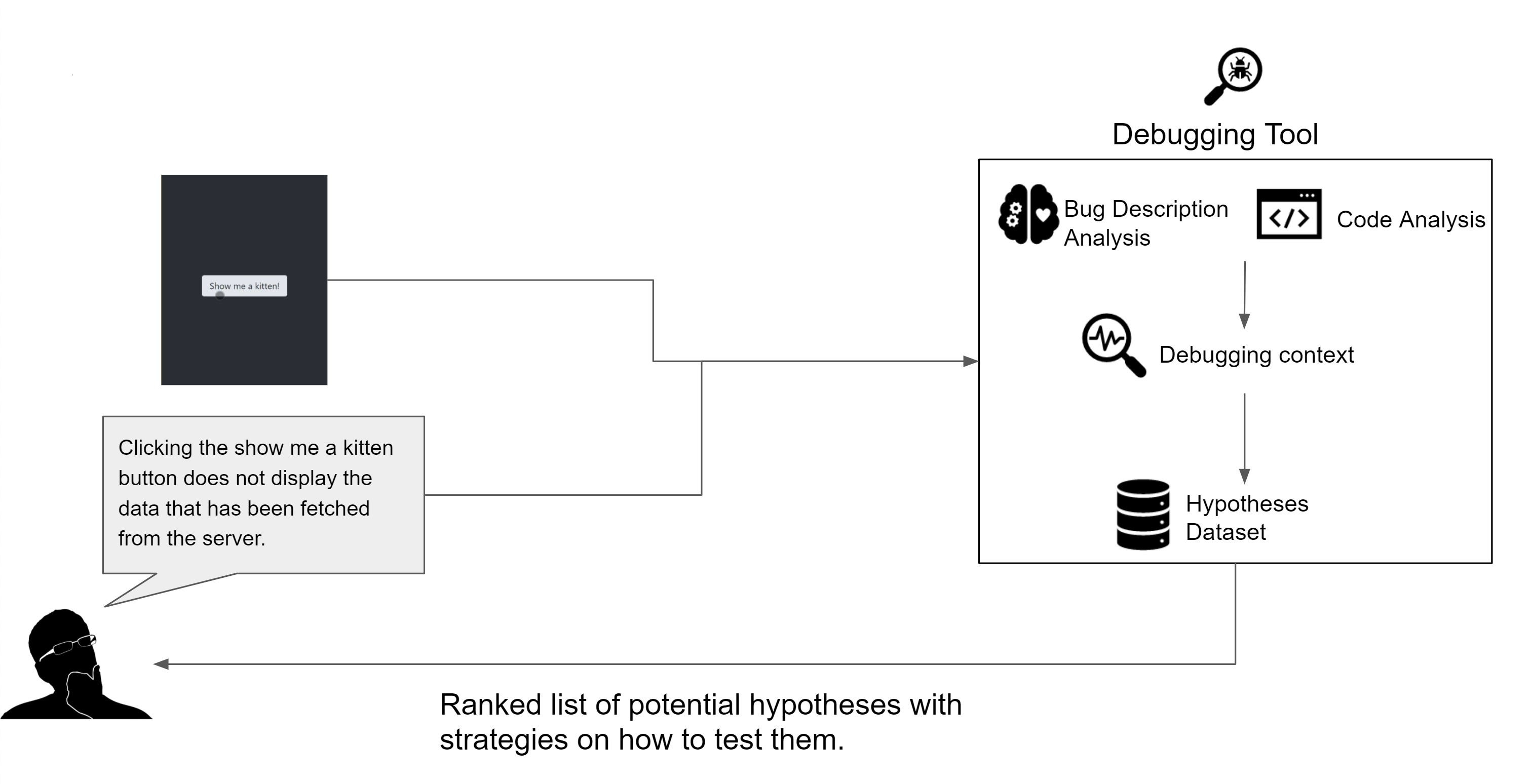

A. Alaboudi and T. D. LaToza, “Using Hypotheses as a Debugging Aid,” in 2020 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC)

As developers debug, they formulate hypotheses about the cause of the defect and gather evidence to test these hypotheses. To better understand the role of hypotheses in debugging, we conducted two studies. In a preliminary study, we found that, even with the benefit of modern internet resources, incorrect hypotheses can lead developers to investigate irrelevant information and can block progress. We then conducted a controlled experiment in which 20 developers debugged and recorded their hypotheses. We found that developers have few hypotheses: two per defect. Having a correct hypothesis early strongly predicted later success. We also studied the impact of two debugging aids: fault locations and potential hypotheses. Offering fault locations did not help developers formulate more correct hypotheses or debug more successfully. In contrast, offering potential hypotheses made developers six times more likely to succeed. These results demonstrate the potential of future debugging tools that enable finding and sharing relevant hypotheses.

🥇Best Paper Award🥇

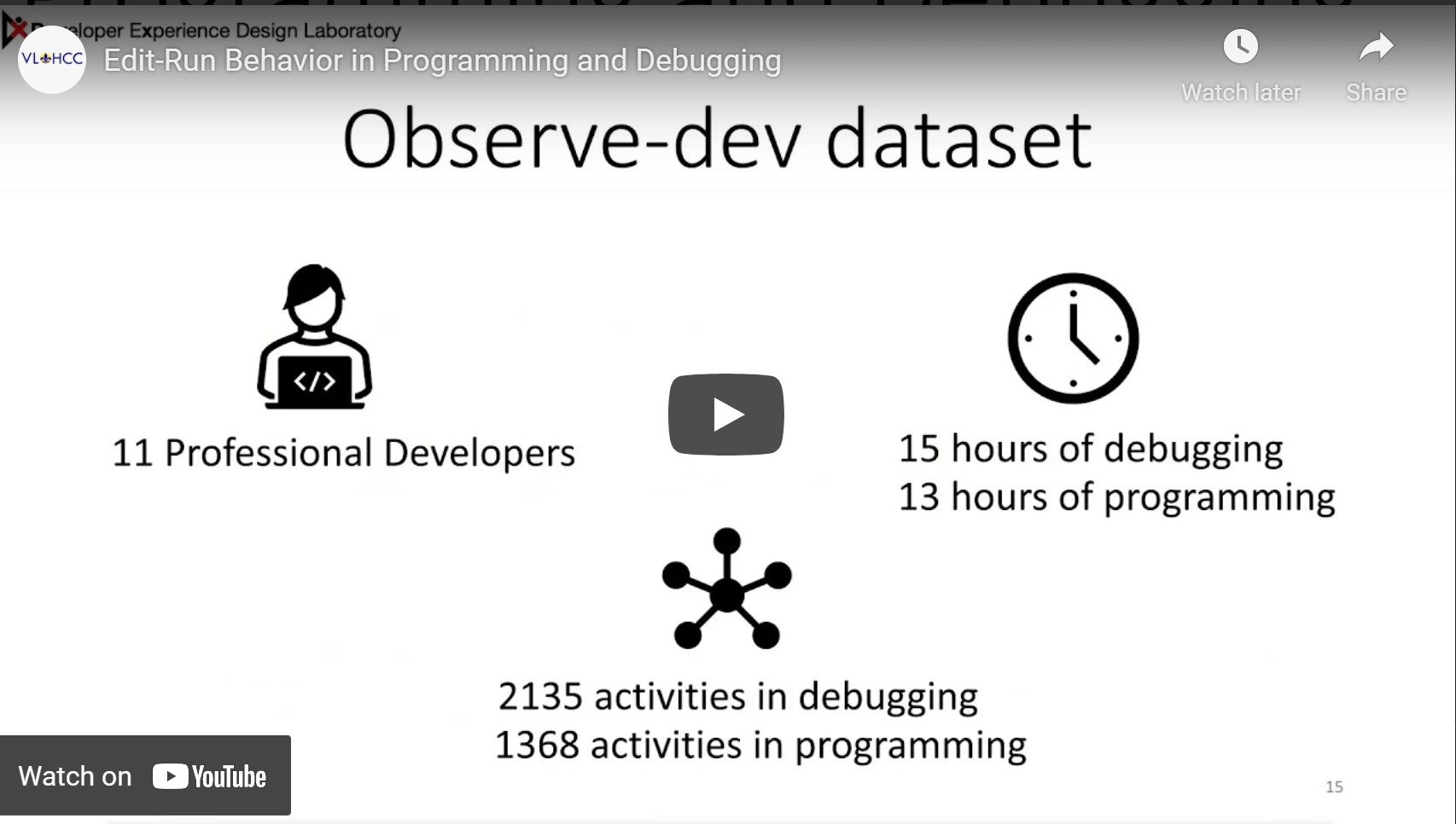

A. Alaboudi and T. D. LaToza, “Edit-Run Behavior in Programming and Debugging,” in 2021 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC)

As developers program and debug, they continuously edit and run their code, a behavior known as edit-run cycles. While techniques such as live programming are intended to support this behavior, little is known about the characteristics of edit-run cycles themselves. To bridge this gap, we analyzed 28 hours of programming and debugging work from 11 professional developers, encompassing over three thousand development activities. We mapped activities to edit or run steps, constructing 581 debugging and 207 programming edit-run cycles. We found that edit-run cycles are frequent. Developers edit and run the program, on average, 7 times before fixing a defect and twice before introducing a defect. Developers waited longer before running the program again when programming than when debugging, with a mean cycle length of 3 minutes for programming and 1 minute for debugging. Most cycles involved an edit to a single file, after which the developer ran the program to observe the impact on the final output. Edit-run cycles that included activities beyond editing and running, such as navigating between files, consulting resources, or interacting with other IDE features, were much longer, with a mean length of 5 minutes compared with 1.5 minutes for cycles without such activities. We conclude with a discussion of design recommendations for tools that enable more fluid edit-run cycles.